Elizabeth Loftus is one of the world’s most influential psychologists and I have the greatest respect for her and her work. Several years ago we attended the same party and I still recall her charisma and good sense of humor. Also, Elizabeth Loftus studied mathematical psychology at Stanford, and that basically makes us academic family.

But… just like Stanford math psych graduate Rich Shiffrin, Elizabeth Loftus appears less than enthusiastic about the recent turning of the methodological screws. Below is an excerpt from a recent interview for the Dutch journal De Psycholoog (the entire interview can be accessed, in Dutch, here). I back-translated the relevant fragment from Dutch to English:

Vittorio Busato, the interviewer: “What is your opinion on the replication crisis in psychology?”

Elizabeth Loftus: “The many strict, methodological precepts did not make scientific research any more fun. For instance, you would be disallowed from peeking at your data as it is being collected. But how can you be a curious researcher if you can’t check at an early stage whether your research is headed in the predicted direction? Should I really feel guilty when I do this? Nowadays there is much hostility among researchers, some feel harassed because they have to make their data publicly available. Sometimes researchers tell me that they wish to replicate my very first study on the misinformation effect. I just think Really, what’s the point? I don’t believe that single studies are important in psychology. Moreover, hundreds of studies have been done on that effect. Back in the day we used borrowed film material that we returned. Good luck retrieving that!”

There are at least five separate statements in here. When Elizabeth Loftus speaks, the world listens, so it is important to discuss the merits and possible demerits of her claims. So here we go, in reverse order:

Claim 1: Replicating a seminal study is pointless

Here I have to disagree. I believe that we have a duty towards our students to confirm that the work presented in our textbooks is in fact reliable (see also Bakker et al., 2013). Sometimes, even when hundreds of studies have been conducted on a particular phenomenon, the effect turns out to be surprisingly elusive — but only after the methodological screws have been turned. That said, it can be more productive to replicate a later study instead of the original, particularly when that later study removes a confound, is better designed, and is generally accepted as prototypical.

Claim 2: Researchers feel harassed because they have to make their data publicly available

As a signatory of the Peer Reviewers’ Openness Initiative, I would have to disagree here as well. Of course, Elizabeth Loftus may be entirely correct that some researchers feel harassed. In my opinion, this is the same kind of harassment that a child has to endure when it learns to wash its hands after visiting the restroom. Likewise, the feeling of being harassed will quickly fade once sharing data becomes the norm (see also Houtkoop et al., 2018).

Claim 3: Nowadays there is much hostility among researchers

Sudden shifts in research culture are guaranteed to lead to some friction between the old and the new. Researchers not on board with the idea of open science will feel pressured and may sometimes act out; on the other hand, not all who have picked up the open science flag appear to be of sound mind and body.

When behaviorism gave way to the cognitive revolution I’m guessing some behaviorists must have felt more than a little peevish, and some cognitive scientists probably overshot the mark out of misplaced zeal or just because they were obnoxious. Generally speaking, the focus on methodological improvement and collaboration seems to have increased academic camaraderie, especially among early career researchers, who are among the most enthusiastic proponents of open science. Of course the examples of hostility are much more visible than the examples of camaraderie, and this may easily skew one’s overall impression.

Claim 4: I should not feel guilty when I peek at data as it is being collected

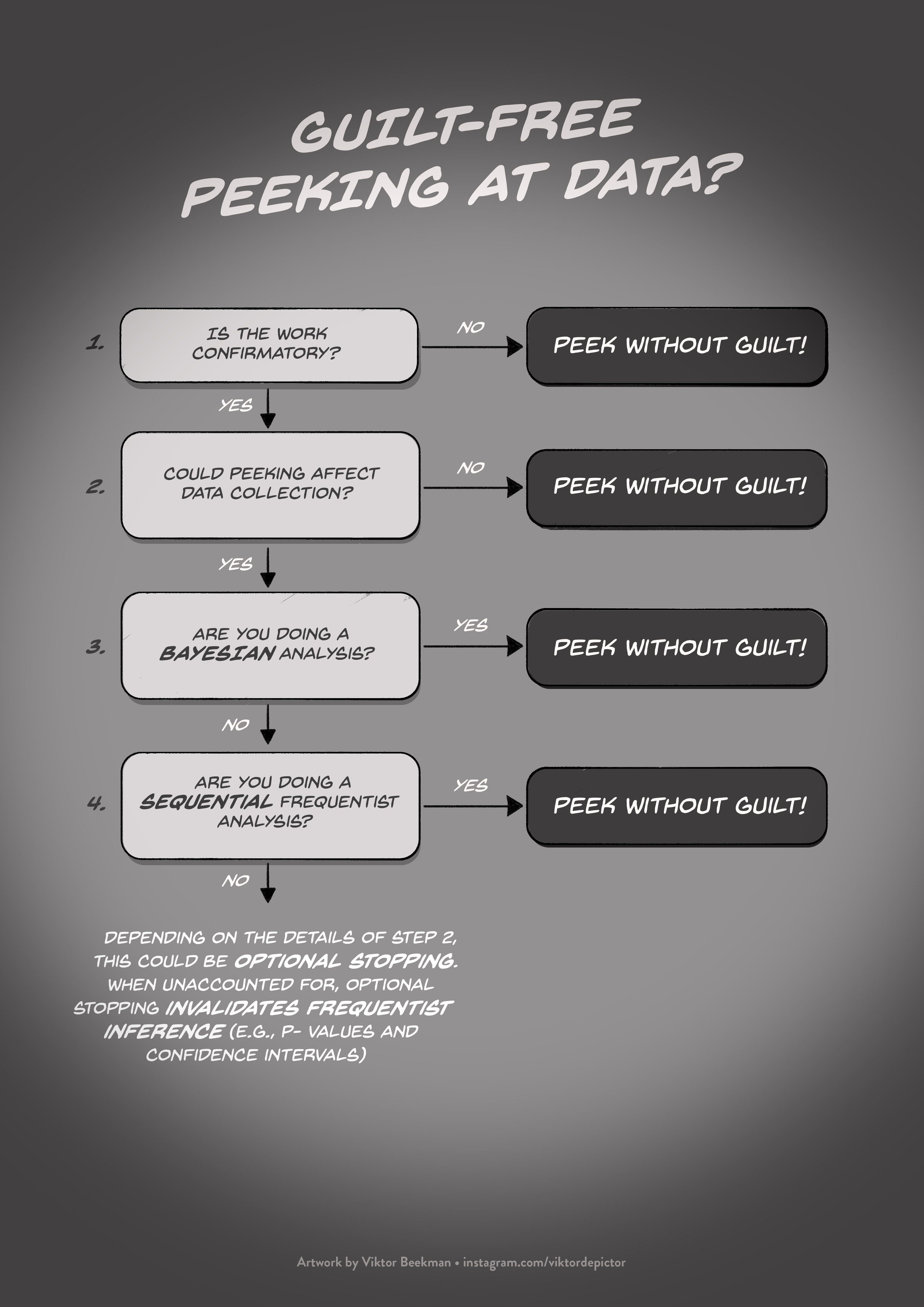

This is the most interesting claim, and one with the largest practical repercussions. I agree with Loftus here. It is perfectly sound methodological practice to peek at data as it is being collected. Specifically, guilt-free peeking is possible if the research is exploratory (and this is made unambiguously clear in the published report). If the research is confirmatory, then peeking is still perfectly acceptable, just as long as the peeking does not influence the sampling plan. But even that is allowed as long as one employs either a frequentist sequential analysis or a Bayesian analysis (e.g., Rouder, 2014; we have a manuscript in preparation that provides five intuitions for this general rule). The only kind of peeking that should cause sleepless nights is when the experiment is designed as a confirmatory test, the peeking affects the sampling plan, the analysis is frequentist, and the sampling plan is disregarded in the analysis and misrepresented in the published report. This unfortunate combination invokes what is known as “sampling to a foregone conclusion”, and it invalidates the reported statistical inference. For those who are still confused, here’s the overview, courtesy of Viktor Beekman:
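
To make the one problematic case concrete, here is a minimal simulation sketch. It is my own illustration, not taken from the interview or from Rouder (2014). It assumes a two-sided z-test with a known standard deviation of 1, a true effect of exactly zero, and a researcher who tests after every batch of 10 observations and stops at the first p < .05. The function names, the batch size, and the maximum sample size are all made up for the example.

```python
import math
import random

Z_CRIT = 1.959963984540054  # two-sided 5% critical value for a z-test


def significant(total, n):
    """Two-sided z-test of mean = 0, assuming a known standard deviation of 1."""
    return abs(total / math.sqrt(n)) > Z_CRIT


def run_experiment(rng, n_max, batch, peek):
    """Simulate one study under the null hypothesis (true mean is 0).

    With peek=True the test is run after every batch and sampling stops at
    the first significant result; with peek=False it is run once, at n_max.
    """
    total = 0.0
    for n in range(1, n_max + 1):
        total += rng.gauss(0.0, 1.0)
        if peek and n % batch == 0 and significant(total, n):
            return True
    return significant(total, n_max)


def false_positive_rate(peek, n_studies=4000, n_max=200, batch=10, seed=1):
    """Fraction of simulated null studies that end with a significant result."""
    rng = random.Random(seed)
    hits = sum(run_experiment(rng, n_max, batch, peek) for _ in range(n_studies))
    return hits / n_studies


if __name__ == "__main__":
    print("fixed n :", false_positive_rate(peek=False))
    print("peeking :", false_positive_rate(peek=True))
```

Under these assumptions the fixed-n rate should sit near the nominal 5%, whereas the peek-and-stop rate should come out well above it, even though no effect exists. Letting the maximum sample size grow pushes that rate higher still, which is the sense in which the conclusion is "foregone". A frequentist sequential design repairs this by adjusting the threshold for the number of looks.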

Claim 5: The many strict, methodological precepts did not make scientific research any more fun

From my own vantage point I think research is much more fun now than it was a decade ago. For many papers that are currently being published, I can access the data for reanalysis and I can have greater trust in the replicability because of preregistration. Multi-lab collaborative efforts provide definitive results quickly, and a renewed interest in methodology creates a climate in which researchers are more and more open to using what Gigerenzer called “statistical thinking instead of statistical rituals”.

Response by Elizabeth Loftus

The response below was slightly paraphrased from email correspondence and is presented here with Elizabeth Loftus’ consent:

“I love your chart on guilt-free peeking! Thanks for making me feel a bit less guilty.

As for the translation into English of my interview… it is stilted and doesn’t sound like me talking. But I’ll try to respond to one point. The point about the importance of one single study stands out. I heard Linda Smith make that point forcefully at a recent conference. I wouldn’t say that it is entirely “pointless”, but it might be of minimal value if there are lots of conceptual replications.

When you have scores of studies on a topic, how valuable is it to have a massive replication effort devoted to say the very first one? Should we devote tons of research to the very first DRM (Deese, Roediger & McDermott) study now that hundreds have been done? I am not sure this is the best use of collective resources.”

References

Bakker, M., Cramer, A. O. J., Matzke, D., Kievit, R. A., van der Maas, H. L. J., Wagenmakers, E.-J., & Borsboom, D. (2013). Dwelling on the past. European Journal of Personality, 27, 120-121. Open peer commentary on Asendorpf et al., “Recommendations for increasing replicability in psychology”. DOI: 10.1002/per.1920

Busato, V. (2019). Interview geheugenonderzoeker Elizabeth Loftus: “Mijn onderzoek heeft me toleranter gemaakt” [Interview memory researcher Elizabeth Loftus: “My research has made me more tolerant”]. De Psycholoog, 54, 26-30. The entire interview is here; the pdf was kindly made available for sharing by Vittorio Busato, editor of De Psycholoog.

Houtkoop, B. L., Chambers, C., Macleod, M., Bishop, D. V. M., Nichols, T. E., & Wagenmakers, E.-J. (2018). Data sharing in psychology: A survey on barriers and preconditions. Advances in Methods and Practices in Psychological Science, 1, 70-85. The Open Access link is here:

Rouder, J. N. (2014). Optional stopping: No problem for Bayesians. Psychonomic Bulletin & Review, 21, 301-308.

About The Authors

Eric-Jan Wagenmakers

Eric-Jan (EJ) Wagenmakers is professor at the Psychological Methods Group at the University of Amsterdam.