Bayes’ rule dictates how new data ![]() update the credibility of competing accounts of the world

update the credibility of competing accounts of the world ![]() . An immediate consequence of the definition of conditional probability, Bayes’ rule is usually presented as follows:

. An immediate consequence of the definition of conditional probability, Bayes’ rule is usually presented as follows:

![]()

The way I mentally check this equation is to take the denominator of the expression on the right-hand side, ![]() , and multiply it with the left side of the equation, so we have

, and multiply it with the left side of the equation, so we have ![]() , which equals

, which equals ![]() by the definition of conditional probability. This is the same as the numerator on the right-hand side of the equation, namely

by the definition of conditional probability. This is the same as the numerator on the right-hand side of the equation, namely ![]() .

.

Below I will present two ways in which students might memorize Bayes’ rule without directly using the law of conditional probability. This will become easier when we give meaning to the abstract symbols ![]() and

and ![]() . In the following we replace

. In the following we replace ![]() with

with ![]() (indexing rival accounts of the world) and we replace

(indexing rival accounts of the world) and we replace ![]() with

with ![]() (observed data), so we are trying to memorize or reconstruct this version of Bayes’ rule:

(observed data), so we are trying to memorize or reconstruct this version of Bayes’ rule:

![]()

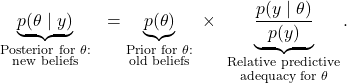

This can be rewritten and interpreted as follows:

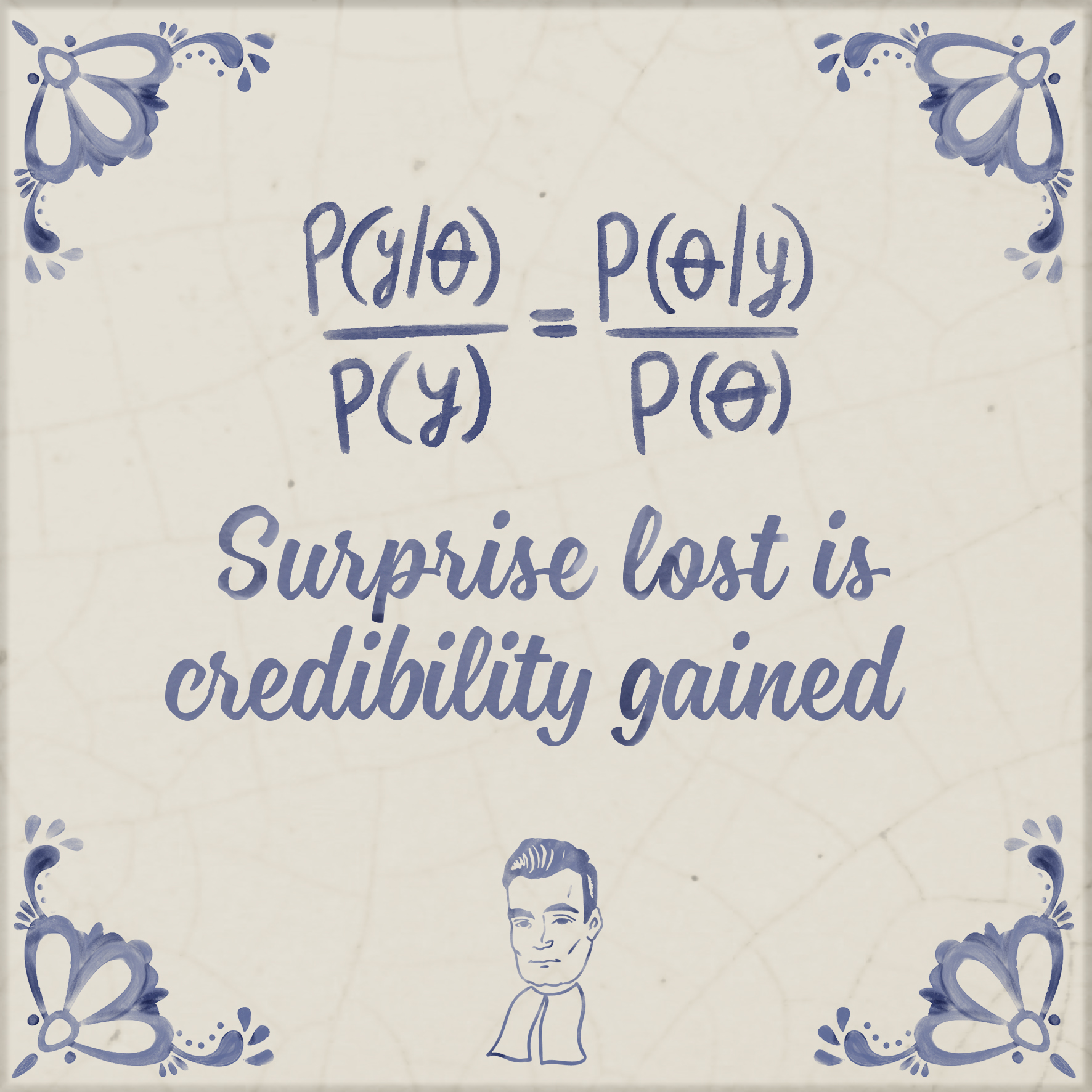

Method 1: Surprise lost is credibility gained

We may take the above equation and divide both sides by ![]() in order to obtain the following expression (cf. Rouder & Morey, 2019; see this blogpost for more detail):

in order to obtain the following expression (cf. Rouder & Morey, 2019; see this blogpost for more detail):

![]()

The left-hand side of the equation shows the change in credibility brought about by taking into account the observed data ![]() ; the right-hand side shows the relative predictive adequacy for

; the right-hand side shows the relative predictive adequacy for ![]() , that is, the change in surprise resulting from conditioning on

, that is, the change in surprise resulting from conditioning on ![]() . When conditioning on a particular hypothesis

. When conditioning on a particular hypothesis ![]() makes the data less surprising, this hypothesis gains credibility: surprise lost is credibility gained. The aspect that makes this equation easy to recall is that the left-hand side is just the same as the right-hand side, but with

makes the data less surprising, this hypothesis gains credibility: surprise lost is credibility gained. The aspect that makes this equation easy to recall is that the left-hand side is just the same as the right-hand side, but with ![]() and

and ![]() switched.

switched.

Tile designed by Viktor Beekman, CC-BY.

Method 2: Conceptual reconstruction

The second method to reproduce Bayes’ rule is based on a number of insights. We start by writing down ![]() , because this is what we want to know: our knowledge of

, because this is what we want to know: our knowledge of ![]() after observing data

after observing data ![]() . We know that this has to involve a change from our knowledge before observing data

. We know that this has to involve a change from our knowledge before observing data ![]() , so we are ready to write

, so we are ready to write ![]() , where

, where ![]() is the updating factor. Now this updating factor consists of two components, and these can be remembered easily from the following considerations. First, we know that

is the updating factor. Now this updating factor consists of two components, and these can be remembered easily from the following considerations. First, we know that ![]() needs to involve a division by

needs to involve a division by ![]() . This is actually right there in the equation: when we write

. This is actually right there in the equation: when we write ![]() , the verical stroke is inspired by the slanted division sign (see the post “The man who rewrote conditional probability” for details). Second, in the numerator of this division there needs to be our final ingredient,

, the verical stroke is inspired by the slanted division sign (see the post “The man who rewrote conditional probability” for details). Second, in the numerator of this division there needs to be our final ingredient, ![]() . The reason why this has to be there is because otherwise Bayes’ rule would not achieve its actual objective, which is to infer something about the possible causes

. The reason why this has to be there is because otherwise Bayes’ rule would not achieve its actual objective, which is to infer something about the possible causes ![]() based on the observed data

based on the observed data ![]() (i.e.,

(i.e., ![]() ) based partly on the inverse information: the predictive adequacy for observed data

) based partly on the inverse information: the predictive adequacy for observed data ![]() based on assumed causes

based on assumed causes ![]() (i.e.,

(i.e., ![]() ).

).

References

Rouder, J. N., & Morey, R. D. (2019). Teaching Bayes’ theorem: Strength of evidence as predictive accuracy. The American Statistician, 73, 186-190.