Here we continue our coverage of the 1974 preface of Bruno de Finetti’s masterpiece “Theory of Probability”, which is missing from the reprint of the 1970 book. Multiple posts are required to cover the entire preface. Below, the use of italics is always as in the original text.

De Finetti’s Preface, Continued [Annotated]

“The numerous, different, opposed attempts to put forward particular points of view which, in the opinion of their supporters, would endow Probability Theory with a ‘nobler’ status, or a ‘more scientific’ character, or ‘firmer’ philosophical or logical foundations, have only served to generate confusion and obscurity, and to provoke well-known polemics and disagreements–even between supporters of essentially the same framework.

The main points of view that have been put forward are as follows.

The classical view, based on physical considerations of symmetry, in which one should be obliged to give the same probability to such ‘symmetric’ cases. But which symmetry? And, in any case, why? The original sentence becomes meaningful if reversed: the symmetry is probabilistically significant, in someone’s opinion, if it leads him to assign the same probabilities to such events.”

This classical view is nicely described by Laplace in his “Philosophical Essay on Probabilities”:

“The theory of chance consists in reducing all the events of the same kind to a certain number of cases equally possible, that is to say, to such as we may be equally undecided about in regard to their existence, and in determining the number of cases favorable to the event whose probability is sought. The ratio of this number to that of all the cases possible is the measure of this probability, which is thus simply a fraction whose numerator is the number of favorable cases and whose denominator is the number of all the cases possible.” (Laplace, 1829/1902, pp. 6-7)
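Laplace’s definition boils down to a ratio of counts. The sketch below illustrates it. The dice example and the function name `classical_probability` are hypothetical illustrations, not Laplace’s own. It enumerates the 36 ordered outcomes of two dice, treats them as equally possible (the very assumption de Finetti questions), and counts the outcomes whose faces sum to seven:

```python
from fractions import Fraction
from itertools import product

def classical_probability(cases, is_favorable):
    """Laplace's ratio: favorable cases divided by all cases.

    Assumes (as Laplace does) that every case is 'equally possible';
    the function itself cannot check that assumption.
    """
    cases = list(cases)
    favorable = sum(1 for case in cases if is_favorable(case))
    return Fraction(favorable, len(cases))

# Two dice: 36 ordered outcomes, assumed equally possible.
two_dice = product(range(1, 7), repeat=2)
p_seven = classical_probability(two_dice, lambda roll: sum(roll) == 7)
print(p_seven)  # 1/6
```

Everything hinges on the choice of cases. Someone who judged the dice to be loaded would not regard the 36 outcomes as equally possible, and the ratio would no longer express that person’s probability.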

Personally, I am not convinced that Laplace’s opinion on probability was dramatically different from that of de Finetti. Laplace was a hard-core determinist and firmly believed that probability was purely a measure of one’s lack of knowledge. In the fragment above, Laplace explicitly states that the “cases equally possible” refer to us being “equally undecided”. Later, on page 8, Laplace gives a simple example of how different background knowledge leads to radically different assessments of probability: “In things which are only probable the difference of the data, which each man has in regard to them, is one of the principal causes of the diversity of opinions which prevail in regard to the same objects.”

We continue with de Finetti’s preface:

“The logical view is similar, but much more superficial and irresponsible inasmuch as it is based on similarities or symmetries which no longer derive from the facts and their actual properties, but merely from the sentences which describe them, and from their formal structure or language.”

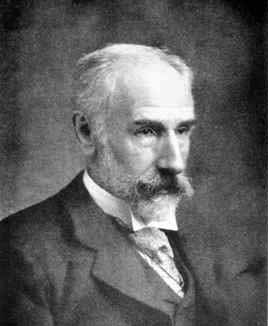

Galavotti (2005) explains that the “logical interpretation” of probability holds that beliefs should be “rational”: probability indicates not what people actually believe, but what they ought to believe. In contrast, in the subjectivist interpretation probabilities express actual beliefs, and the only requirement is that these beliefs be coherent (I find this somewhat contradictory, since real people’s actual beliefs are not fully coherent, but OK). The logical interpretation is associated with people such as de Morgan, Jevons, Keynes, Carnap, and W. E. Johnson. Based on my own reading, de Morgan and Jevons were in close agreement with Laplace, and in their own writings they also made it clear that probability reflects background knowledge. W. E. Johnson was an inspiration to several Bayesians, including Dorothy Wrinch and Harold Jeffreys; as we will see next, Johnson also anticipated some of de Finetti’s ideas.

De Finetti continues:

“The frequentist (or statistical) view presupposes that one accepts the classical view, in that it considers an event as a class of individual events, the latter being ‘trials’ of the former. The individual events not only have to be ‘equally probable’, but also ‘stochastically independent’… (these notions when applied to individual events are virtually impossible to define or explain in terms of the frequentist interpretation). In this case, also, it is straightforward, by means of the subjective approach, to obtain, under the appropriate conditions, in a perfectly valid manner, the results aimed at (but unattainable) in the statistical formulation. It suffices to make use of the notion of exchangeability. The result, which acts as a bridge connecting this new approach with the old, has been referred to by the objectivists as ‘de Finetti’s representation theorem’.”

Ah yes, de Finetti’s famous theorem. Several books and articles try to explain the relevance of this celebrated theorem, for instance Lindley (2006, pp. 107-109), Diaconis & Skyrms (2018, pp. 122-125), and Zabell (2005, chapter 4, in which he discusses the link with W. E. Johnson, who introduced the concept of exchangeability before de Finetti). One of my resolutions for 2020 is to understand the relevance of this theorem. It ought to be highly relevant, or very smart statisticians would not make such a fuss about it; on the other hand, when I look at the equation it seems to state the obvious. Perhaps the theorem matters mainly to those who aren’t already devout Bayesians? I guess I’ll find out…
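For binary trials, the theorem says that any infinitely exchangeable sequence has probabilities of the form P(x1, …, xn) = ∫ θ^k (1 − θ)^(n − k) dμ(θ), where k is the number of ones and μ is some distribution over the unknown chance θ. The sketch below makes this concrete under one assumed choice of μ: the uniform distribution on [0, 1]. This choice and the function name `sequence_probability` are hypothetical illustrations. With a uniform μ the integral has the closed form k!(n − k)!/(n + 1)!. The code checks two things. First, reordering a sequence leaves its probability unchanged, which is exchangeability. Second, the trials are nonetheless not independent, because observing one success raises the probability of the next:

```python
from fractions import Fraction
from itertools import permutations
from math import factorial

def sequence_probability(seq):
    """Probability of a 0/1 sequence under a uniform mixing distribution on theta.

    Integral over [0, 1] of theta^k * (1 - theta)^(n - k) d(theta)
    equals k! (n - k)! / (n + 1)!, where k is the number of ones.
    """
    n, k = len(seq), sum(seq)
    return Fraction(factorial(k) * factorial(n - k), factorial(n + 1))

# Exchangeability: every reordering of (1, 1, 0) gets the same probability.
for seq in sorted(set(permutations((1, 1, 0)))):
    print(seq, sequence_probability(seq))  # each ordering: 1/12

# Not independence: a first success raises the chance of a second one
# from 1/2 to 2/3, which is Laplace's rule of succession, (k + 1) / (n + 2).
p_first = sequence_probability((1,))
p_both = sequence_probability((1, 1))
print(p_first, p_both / p_first)  # 1/2 2/3
```

A different μ would give different numbers. The exchangeability check would still hold, because the probability depends only on k and n.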

De Finetti continues:

“It follows that all the three proposed definitions of ‘objective’ probability, although useless per se, turn out to be useful and good as valid auxiliary devices when included as such in the subjectivistic theory.

The above-mentioned ‘representation theorem’, together with every other more or less original result in my conception of probability theory, should not be considered as a discovery (in the sense of being the outcome of advanced research). Everything is essentially the fruit of a thorough examination of the subject matter, carried out in an unprejudiced manner, with the aim of rooting out nonsense.

And probably there is nothing new; apart, perhaps, from the systematic and constant concentration on the unity of the whole, avoiding piecemeal tinkering about, which is inconsistent with the whole; this yields, in itself, something new.

Something that may strike the reader as new is the radical nature of certain of my theses, and of the form in which they are presented. This does not stem from any deliberate attempt at radicalism, but is a natural consequence of my abandoning the reverential awe which sometimes survives in people who at one time embraced the objectivistic theories prior to their conversion (which hardly ever leaves them free of some residual).”

More to follow…

References

de Finetti, B. (1974). Theory of Probability, Vols. 1 and 2. New York: John Wiley & Sons.

Diaconis, P., & Skyrms, B. (2018). Ten great ideas about chance. Princeton: Princeton University Press.

Galavotti, M. C. (2005). A philosophical introduction to probability. Stanford: CSLI Publications.

Laplace, P.-S. (1829/1902). A philosophical essay on probabilities. London: Chapman & Hall.

Lindley, D. V. (2006). Understanding uncertainty. Hoboken: Wiley.

Zabell, S. L. (2005). Symmetry and its discontents: Essays on the history of inductive probability. New York: Cambridge University Press.

About The Author

Eric-Jan Wagenmakers

Eric-Jan (EJ) Wagenmakers is a professor in the Psychological Methods Group at the University of Amsterdam.