Today I am giving a lecture at the Replication and Reproducibility Event II: Moving Psychological Science Forward, organised by the British Psychological Society. The lecture is similar to the one I gave a few months ago at an ASA meeting in Bethesda, and it makes the case for radical transparency in statistical reporting. The talking points, in order:

- The researcher who has devised a theory and conducted an experiment is probably the galaxy’s most biased analyst of the outcome.

- In the current academic climate, the galaxy’s most biased analyst is allowed to conduct analyses behind closed doors, often without being required or even encouraged to share data and analysis code.

- So data are analyzed without accountability by the person who is easiest to fool, who often has limited statistical training, and who has every incentive imaginable to produce p < .05. This is not good.

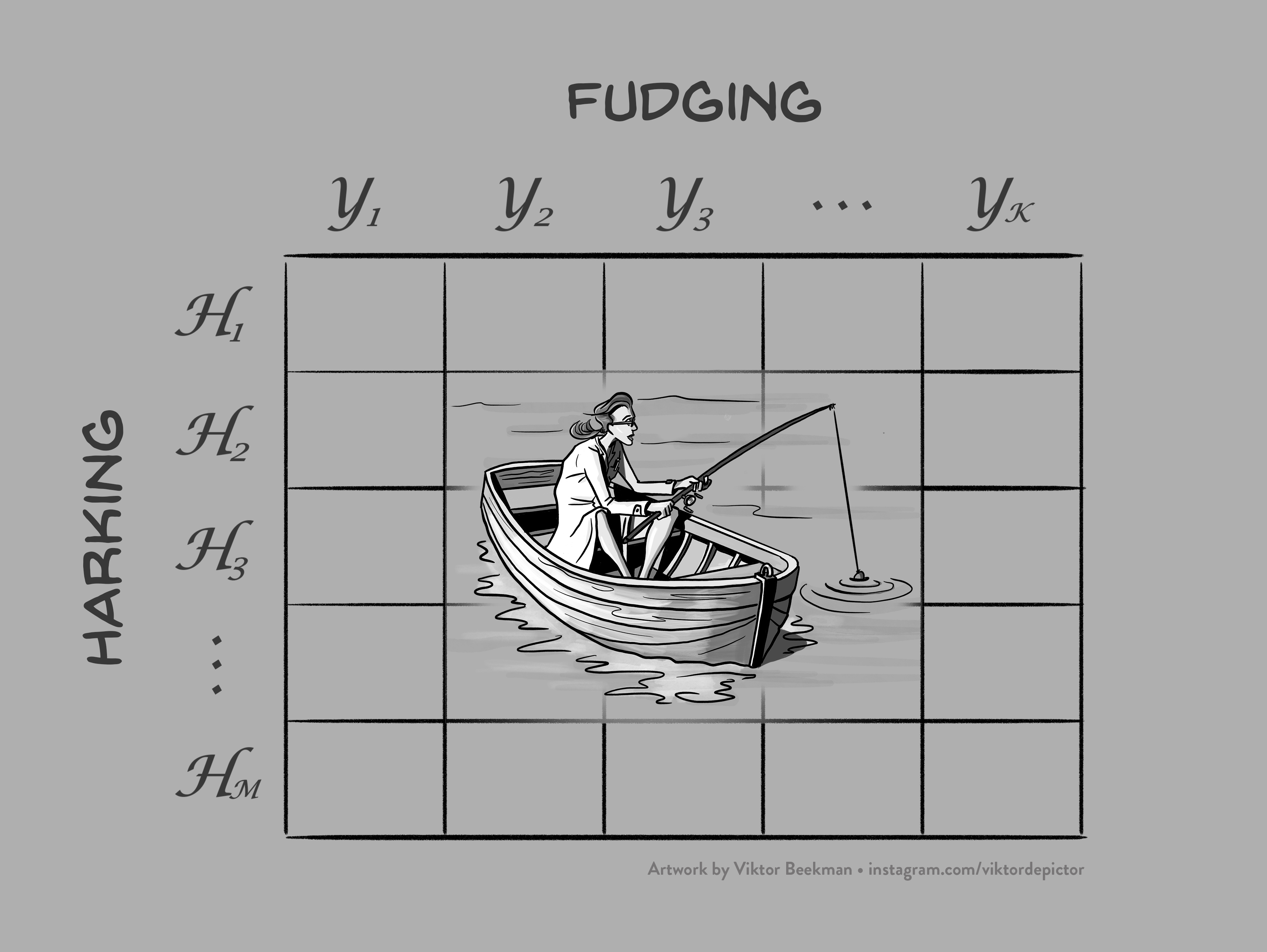

- The result is publication bias, fudging, and HARKing (Hypothesizing After the Results are Known). These in turn yield overconfident claims and spurious results that do not replicate. In general, researchers abhor uncertainty, and this needs to change.

- There are several cures for uncertainty allergy, including:

    - preregistration
    - outcome-independent publishing
    - sensitivity analysis (e.g., multiverse analysis and crowdsourcing)
    - data sharing
    - data visualization
    - inclusive inferential analyses

- Transparency is mental hygiene: the scientific equivalent of brushing your teeth, or washing your hands after visiting the restroom. It needs to become part of our culture, and it needs to be encouraged by funders, editors, and institutes.

The complete PDF of the presentation is here.


About The Author

Eric-Jan Wagenmakers

Eric-Jan (EJ) Wagenmakers is a professor in the Psychological Methods Group at the University of Amsterdam.